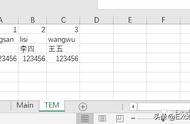

(Stream Analyzer parsing a NALU header)

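As you can see, the parsed NALU header of the SPS_NUT is shown on the right side of the screenshot. Thanks to Elecard for developing such a handy tool; it was a great help in implementing VPS parsing.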
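To make the header fields in the screenshot concrete, here is a minimal sketch of decoding the two-byte H.265 NAL unit header. The names `NaluHeaderFields` and `ParseNaluHeaderFields` are hypothetical and are not Chromium's API; the bit layout follows the NAL unit header syntax in T-REC-H.265.

```cpp
#include <cstdint>

// Hypothetical container for the fields of the two-byte H.265 NAL unit header.
struct NaluHeaderFields {
  int nal_unit_type = 0;          // 6 bits, e.g. 32 = VPS_NUT, 33 = SPS_NUT
  int nuh_layer_id = 0;           // 6 bits
  int nuh_temporal_id_plus1 = 0;  // 3 bits, must not be 0
};

// Decodes the two bytes that follow the start code. Returns false if the
// header is malformed.
bool ParseNaluHeaderFields(uint8_t b0, uint8_t b1, NaluHeaderFields* out) {
  if (b0 & 0x80)  // forbidden_zero_bit must be 0
    return false;
  out->nal_unit_type = (b0 >> 1) & 0x3F;
  // nuh_layer_id straddles the byte boundary: 1 bit from b0, 5 bits from b1.
  out->nuh_layer_id = ((b0 & 0x01) << 5) | (b1 >> 3);
  out->nuh_temporal_id_plus1 = b1 & 0x07;
  return out->nuh_temporal_id_plus1 != 0;
}
```

For example, the bytes 0x40 0x01 decode to nal_unit_type 32 (VPS_NUT), and 0x42 0x01 decode to 33 (SPS_NUT).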

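Looking at the Chromium code structure, I found that @Jeffery Kardatzke had already completed the Linux VAAPI HEVC hardware decoding implementation, along with most of the H265 NALU parsing logic, by the end of 2020. Because hardware decoding on Linux does not need the VPS parameters, he did not implement VPS parsing. According to the Apple Developer documentation, however, creating a decode session with the CMVideoFormatDescriptionCreateFromHEVCParameterSets API requires NALU data of three types: VPS, SPS, and PPS. A large part of the work of implementing macOS hardware decoding is therefore parsing the VPS NALU: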

First, referring to T-REC-H.265-202108-I and the H265RawVPS struct already defined in FFmpeg, we define the VPS struct we want to parse:

// media/video/h265_parser.h

// Definition of the H265VPS struct

struct MEDIA_EXPORT H265VPS {

H265VPS();

int vps_video_parameter_set_id; // i.e. vps_id, needed later

bool vps_base_layer_internal_flag;

bool vps_base_layer_available_flag;

int vps_max_layers_minus1;

int vps_max_sub_layers_minus1;

bool vps_temporal_id_nesting_flag;

H265ProfileTierLevel profile_tier_level;

int vps_max_dec_pic_buffering_minus1[kMaxSubLayers]; // needed later

int vps_max_num_reorder_pics[kMaxSubLayers]; // needed later

int vps_max_latency_increase_plus1[kMaxSubLayers];

int vps_max_layer_id;

int vps_num_layer_sets_minus1;

bool vps_timing_info_present_flag;

// The remaining fields are not needed, so their parsing is not implemented yet

};

Next, we implement the H265VPS parsing logic:

// media/video/h265_parser.cc

// VPS parsing logic

H265Parser::Result H265Parser::ParseVPS(int* vps_id) {

DVLOG(4) << "Parsing VPS";

Result res = kOk;

DCHECK(vps_id);

*vps_id = -1;

std::unique_ptr<H265VPS> vps = std::make_unique<H265VPS>();

// Read 4 bits

READ_BITS_OR_RETURN(4, &vps->vps_video_parameter_set_id);

// Validate that the value read is within 0-16 (a 4-bit field can only hold 0-15, so this check always passes)

IN_RANGE_OR_RETURN(vps->vps_video_parameter_set_id, 0, 16);

READ_BOOL_OR_RETURN(&vps->vps_base_layer_internal_flag);

READ_BOOL_OR_RETURN(&vps->vps_base_layer_available_flag);

READ_BITS_OR_RETURN(6, &vps->vps_max_layers_minus1);

IN_RANGE_OR_RETURN(vps->vps_max_layers_minus1, 0, 62);

READ_BITS_OR_RETURN(3, &vps->vps_max_sub_layers_minus1);

IN_RANGE_OR_RETURN(vps->vps_max_sub_layers_minus1, 0, 7);

READ_BOOL_OR_RETURN(&vps->vps_temporal_id_nesting_flag);

SKIP_BITS_OR_RETURN(16); // skip vps_reserved_0xffff_16bits

res = ParseProfileTierLevel(true, vps->vps_max_sub_layers_minus1,

&vps->profile_tier_level);

if (res != kOk) {

return res;

}

bool vps_sub_layer_ordering_info_present_flag;

READ_BOOL_OR_RETURN(&vps_sub_layer_ordering_info_present_flag);

for (int i = vps_sub_layer_ordering_info_present_flag

? 0

: vps->vps_max_sub_layers_minus1;

i <= vps->vps_max_sub_layers_minus1; ++i) {

READ_UE_OR_RETURN(&vps->vps_max_dec_pic_buffering_minus1[i]);

IN_RANGE_OR_RETURN(vps->vps_max_dec_pic_buffering_minus1[i], 0, 15);

READ_UE_OR_RETURN(&vps->vps_max_num_reorder_pics[i]);

IN_RANGE_OR_RETURN(vps->vps_max_num_reorder_pics[i], 0,

vps->vps_max_dec_pic_buffering_minus1[i]);

if (i > 0) {

TRUE_OR_RETURN(vps->vps_max_dec_pic_buffering_minus1[i] >=

vps->vps_max_dec_pic_buffering_minus1[i - 1]);

TRUE_OR_RETURN(vps->vps_max_num_reorder_pics[i] >=

vps->vps_max_num_reorder_pics[i - 1]);

}

READ_UE_OR_RETURN(&vps->vps_max_latency_increase_plus1[i]);

}

if (!vps_sub_layer_ordering_info_present_flag) {

for (int i = 0; i < vps->vps_max_sub_layers_minus1; ++i) {

vps->vps_max_dec_pic_buffering_minus1[i] =

vps->vps_max_dec_pic_buffering_minus1[vps->vps_max_sub_layers_minus1];

vps->vps_max_num_reorder_pics[i] =

vps->vps_max_num_reorder_pics[vps->vps_max_sub_layers_minus1];

vps->vps_max_latency_increase_plus1[i] =

vps->vps_max_latency_increase_plus1[vps->vps_max_sub_layers_minus1];

}

}

READ_BITS_OR_RETURN(6, &vps->vps_max_layer_id);

IN_RANGE_OR_RETURN(vps->vps_max_layer_id, 0, 62);

READ_UE_OR_RETURN(&vps->vps_num_layer_sets_minus1);

IN_RANGE_OR_RETURN(vps->vps_num_layer_sets_minus1, 0, 1023);

*vps_id = vps->vps_video_parameter_set_id;

// If a VPS with the same vps_id already exists, replace it

active_vps_[*vps_id] = std::move(vps);

return res;

}

// VPS lookup logic

const H265VPS* H265Parser::GetVPS(int vps_id) const {

auto it = active_vps_.find(vps_id);

if (it == active_vps_.end()) {

DVLOG(1) << "Requested a nonexistent VPS id " << vps_id;

return nullptr;

}

return it->second.get();

}
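ParseVPS above relies on READ_UE_OR_RETURN to read unsigned Exp-Golomb values such as vps_max_dec_pic_buffering_minus1. The following is a minimal sketch of how ue(v) decoding works. The `BitReader` class is hypothetical and much simpler than Chromium's bit reader; in particular, it assumes the emulation prevention bytes (0x000003) have already been removed.

```cpp
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical MSB-first bit reader over an RBSP payload.
class BitReader {
 public:
  explicit BitReader(std::vector<uint8_t> data) : data_(std::move(data)) {}

  // Reads n bits (n <= 32) into *out. Returns false when data runs out.
  bool ReadBits(int n, uint32_t* out) {
    uint32_t value = 0;
    for (int i = 0; i < n; ++i) {
      if (pos_ >= data_.size() * 8)
        return false;
      const uint32_t bit = (data_[pos_ / 8] >> (7 - pos_ % 8)) & 1;
      value = (value << 1) | bit;
      ++pos_;
    }
    *out = value;
    return true;
  }

  // Reads an unsigned Exp-Golomb code: k leading zeros, a 1 bit, then k
  // suffix bits. The decoded value is 2^k - 1 + suffix.
  bool ReadUE(uint32_t* out) {
    int zeros = 0;
    uint32_t bit = 0;
    while (true) {
      if (!ReadBits(1, &bit))
        return false;
      if (bit)
        break;
      if (++zeros > 31)  // would overflow a 32-bit result
        return false;
    }
    uint32_t suffix = 0;
    if (zeros > 0 && !ReadBits(zeros, &suffix))
      return false;
    *out = ((1u << zeros) - 1) + suffix;
    return true;
  }

 private:
  std::vector<uint8_t> data_;
  size_t pos_ = 0;
};
```

The bit strings 1, 010, 011, 00100, and 00101 decode to 0, 1, 2, 3, and 4 respectively, which is why small values such as reorder counts cost only a few bits in the stream.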

Then add the unit test and the fuzzer test:

// media/video/h265_parser_unittest.cc

TEST_F(H265ParserTest, VpsParsing) {

LoadParserFile("bear.hevc");

H265NALU target_nalu;

EXPECT_TRUE(ParseNalusUntilNut(&target_nalu, H265NALU::VPS_NUT));

int vps_id;

EXPECT_EQ(H265Parser::kOk, parser_.ParseVPS(&vps_id));

const H265VPS* vps = parser_.GetVPS(vps_id);

EXPECT_TRUE(!!vps);

EXPECT_TRUE(vps->vps_base_layer_internal_flag);

EXPECT_TRUE(vps->vps_base_layer_available_flag);

EXPECT_EQ(vps->vps_max_layers_minus1, 0);

EXPECT_EQ(vps->vps_max_sub_layers_minus1, 0);

EXPECT_TRUE(vps->vps_temporal_id_nesting_flag);

EXPECT_EQ(vps->profile_tier_level.general_profile_idc, 1);

EXPECT_EQ(vps->profile_tier_level.general_level_idc, 60);

EXPECT_EQ(vps->vps_max_dec_pic_buffering_minus1[0], 4);

EXPECT_EQ(vps->vps_max_num_reorder_pics[0], 2);

EXPECT_EQ(vps->vps_max_latency_increase_plus1[0], 0);

for (int i = 1; i < kMaxSubLayers; ++i) {

EXPECT_EQ(vps->vps_max_dec_pic_buffering_minus1[i], 0);

EXPECT_EQ(vps->vps_max_num_reorder_pics[i], 0);

EXPECT_EQ(vps->vps_max_latency_increase_plus1[i], 0);

}

EXPECT_EQ(vps->vps_max_layer_id, 0);

EXPECT_EQ(vps->vps_num_layer_sets_minus1, 0);

EXPECT_FALSE(vps->vps_timing_info_present_flag);

}

// media/video/h265_parser_fuzzertest.cc

case media::H265NALU::VPS_NUT:

int vps_id;

res = parser.ParseVPS(&vps_id);

break;

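Since FFmpeg already implements VPS parsing, most of the logic here simply mirrors FFmpeg. Once the unit tests passed (build step: autoninja -C out/Release64 media_unittests) and the results also matched StreamAnalyzer, the VPS parsing implementation was complete.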

The parsing logic for SPS, PPS, and SliceHeader is skipped here because the code is long and tedious. If you are interested, see h265_parser.cc (https://source.chromium.org/chromium/chromium/src/+/main:media/video/h265_parser.cc)

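Computing POC (Picture Order Count)

H.264 and HEVC have roughly three frame types: I frames, P frames, and B frames. An I frame (intra-coded frame) is the first frame of a GOP (a group of pictures containing I, P, and B frames) and can be decoded without referring to any other frame. A P frame (forward-predicted frame) needs preceding I or P frames to decode. A B frame (bidirectionally predicted frame) needs preceding I or P frames as well as a following P frame.

These three frame types are not necessarily written to the bitstream in display order, so the decoder has to compute each picture's position in that order, the POC, to recover the correct sequence.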

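(GOP POC values as parsed by StreamEye)

As the StreamEye output above shows, the POC values in decode order follow the pattern 0 -> 4 -> 2 -> 1 -> 3 -> 8 -> 6 ...

The order in which frames appear is critical, so after decoding we must re-sort the frames by POC to make sure the decoded pictures reach the user in display order: 0 -> 1 -> 2 -> 3 -> 4 -> 5 -> 6 -> 7 -> 8.

Apple's VideoToolbox does not implement this part for us, so we have to compute the POC ourselves and reorder afterwards. The implementation is as follows: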

// media/video/h265_poc.h

// Copyright 2022 The Chromium Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#ifndef MEDIA_VIDEO_H265_POC_H_

#define MEDIA_VIDEO_H265_POC_H_

#include <stdint.h>

#include "third_party/abseil-cpp/absl/types/optional.h"

namespace media {

struct H265SPS;

struct H265PPS;

struct H265SliceHeader;

class MEDIA_EXPORT H265POC {

public:

H265POC();

H265POC(const H265POC&) = delete;

H265POC& operator=(const H265POC&) = delete;

~H265POC();

// Compute the POC from the SPS, the PPS, and the parsed SliceHeader

int32_t ComputePicOrderCnt(const H265SPS* sps,

const H265PPS* pps,

const H265SliceHeader& slice_hdr);

void Reset();

private:

int32_t ref_pic_order_cnt_msb_;

int32_t ref_pic_order_cnt_lsb_;

// Whether this is the first picture in the decoding process

bool first_picture_;

};

} // namespace media

#endif // MEDIA_VIDEO_H265_POC_H_

The POC computation logic:

// media/video/h265_poc.cc

// Copyright 2022 The Chromium Authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

#include <stddef.h>

#include <algorithm>

#include "base/cxx17_backports.h"

#include "base/logging.h"

#include "media/video/h265_parser.h"

#include "media/video/h265_poc.h"

namespace media {

H265POC::H265POC() {

Reset();

}

H265POC::~H265POC() = default;

void H265POC::Reset() {

ref_pic_order_cnt_msb_ = 0;

ref_pic_order_cnt_lsb_ = 0;

first_picture_ = true;

}

// As the logic below shows, we compute the POC according to the HEVC spec

// (I referred to Jeffery Kardatzke's implementation in H265Decoder here)

int32_t H265POC::ComputePicOrderCnt(const H265SPS* sps,

const H265PPS* pps,

const H265SliceHeader& slice_hdr) {

int32_t pic_order_cnt = 0;

int32_t max_pic_order_cnt_lsb =

1 << (sps->log2_max_pic_order_cnt_lsb_minus4 + 4);

int32_t pic_order_cnt_msb;

int32_t no_rasl_output_flag;

// Calculate POC for current picture.

if (slice_hdr.irap_pic) {

// 8.1.3

no_rasl_output_flag = (slice_hdr.nal_unit_type >= H265NALU::BLA_W_LP &&

slice_hdr.nal_unit_type <= H265NALU::IDR_N_LP) ||

first_picture_;

} else {

no_rasl_output_flag = false;

}

if (!slice_hdr.irap_pic || !no_rasl_output_flag) {

int32_t prev_pic_order_cnt_lsb = ref_pic_order_cnt_lsb_;

int32_t prev_pic_order_cnt_msb = ref_pic_order_cnt_msb_;

if ((slice_hdr.slice_pic_order_cnt_lsb < prev_pic_order_cnt_lsb) &&

((prev_pic_order_cnt_lsb - slice_hdr.slice_pic_order_cnt_lsb) >=

(max_pic_order_cnt_lsb / 2))) {

pic_order_cnt_msb = prev_pic_order_cnt_msb + max_pic_order_cnt_lsb;

} else if ((slice_hdr.slice_pic_order_cnt_lsb > prev_pic_order_cnt_lsb) &&

((slice_hdr.slice_pic_order_cnt_lsb - prev_pic_order_cnt_lsb) >

(max_pic_order_cnt_lsb / 2))) {

pic_order_cnt_msb = prev_pic_order_cnt_msb - max_pic_order_cnt_lsb;

} else {

pic_order_cnt_msb = prev_pic_order_cnt_msb;

}

} else {

pic_order_cnt_msb = 0;

}

// 8.3.1 Decoding process for picture order count.

if (!pps->temporal_id && (slice_hdr.nal_unit_type < H265NALU::RADL_N ||

slice_hdr.nal_unit_type > H265NALU::RSV_VCL_N14)) {

ref_pic_order_cnt_lsb_ = slice_hdr.slice_pic_order_cnt_lsb;

ref_pic_order_cnt_msb_ = pic_order_cnt_msb;

}

pic_order_cnt = pic_order_cnt_msb + slice_hdr.slice_pic_order_cnt_lsb;

first_picture_ = false;

return pic_order_cnt;

}

} // namespace media
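The MSB wrap-around rule in ComputePicOrderCnt is easiest to follow with concrete numbers. The sketch below isolates that rule for a non-IRAP picture; `DerivePoc` is a hypothetical standalone function, not part of Chromium, and it takes the previous reference picture's LSB and MSB as plain arguments instead of member state.

```cpp
#include <cstdint>

// Hypothetical standalone version of the POC derivation for a picture that
// does not reset the POC. max_lsb is 1 << (log2_max_pic_order_cnt_lsb_minus4 + 4).
int32_t DerivePoc(int32_t lsb, int32_t prev_lsb, int32_t prev_msb,
                  int32_t max_lsb) {
  int32_t msb = prev_msb;
  if (lsb < prev_lsb && (prev_lsb - lsb) >= max_lsb / 2) {
    // The LSB wrapped forward past max_lsb, so move to the next MSB cycle.
    msb = prev_msb + max_lsb;
  } else if (lsb > prev_lsb && (lsb - prev_lsb) > max_lsb / 2) {
    // The picture belongs to the previous MSB cycle.
    msb = prev_msb - max_lsb;
  }
  return msb + lsb;
}
```

With max_lsb = 16, a picture with LSB 2 that follows a reference picture with LSB 14 and MSB 0 gets POC 16 + 2 = 18, because the 4-bit LSB counter has wrapped around.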

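Computing MaxReorderCount

After the POC is computed and the frames are decoded, the frames must be reordered so that they are shown to the user in the correct order. Looking at how the maximum reorder count is computed for H.264, we find that it is quite involved: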

// media/gpu/mac/vt_video_decode_accelerator_mac.cc

// Computation of the maximum reorder count for H264

int32_t ComputeH264ReorderWindow(const H264SPS* sps) {

// When |pic_order_cnt_type| == 2, decode order always matches presentation

// order.

// TODO(sandersd): For |pic_order_cnt_type| == 1, analyze the delta cycle to

// find the minimum required reorder window.

if (sps->pic_order_cnt_type == 2)

return 0;

int max_dpb_mbs = H264LevelToMaxDpbMbs(sps->GetIndicatedLevel());

int max_dpb_frames =

max_dpb_mbs / ((sps->pic_width_in_mbs_minus1 + 1) *

(sps->pic_height_in_map_units_minus1 + 1));

max_dpb_frames = std::clamp(max_dpb_frames, 0, 16);

// See AVC spec section E.2.1 definition of |max_num_reorder_frames|.

if (sps->vui_parameters_present_flag && sps->bitstream_restriction_flag) {

return std::min(sps->max_num_reorder_frames, max_dpb_frames);

} else if (sps->constraint_set3_flag) {

if (sps->profile_idc == 44 || sps->profile_idc == 86 ||

sps->profile_idc == 100 || sps->profile_idc == 110 ||

sps->profile_idc == 122 || sps->profile_idc == 244) {

return 0;

}

}

return max_dpb_frames;

}

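Fortunately, HEVC does not need such an elaborate computation: the encoder has already computed the maximum reorder count and written it into the VPS, so we only need to read it as follows: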

// media/gpu/mac/vt_video_decode_accelerator_mac.cc

// Computation of the maximum reorder count for HEVC

#if BUILDFLAG(ENABLE_HEVC_PARSER_AND_HW_DECODER)

int32_t ComputeHEVCReorderWindow(const H265VPS* vps) {

int32_t vps_max_sub_layers_minus1 = vps->vps_max_sub_layers_minus1;

return vps->vps_max_num_reorder_pics[vps_max_sub_layers_minus1];

}

#endif // BUILDFLAG(ENABLE_HEVC_PARSER_AND_HW_DECODER)

With the reorder count and the POC computed, we keep reusing the existing H.264 reorder logic, and the frames are sorted correctly.
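As a rough illustration of how a reorder window and the POC work together, here is a simplified sketch. `ReorderQueue` is a hypothetical class, not Chromium's actual reorder logic (which also has to deal with IDR boundaries and flushes): it buffers POCs in decode order in a min-heap and releases the smallest one whenever more than `reorder_window` frames are pending.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <queue>
#include <vector>

// Hypothetical reorder buffer keyed by POC only.
class ReorderQueue {
 public:
  explicit ReorderQueue(int reorder_window) : window_(reorder_window) {}

  // Adds a decoded frame's POC and returns the POCs that are now safe to
  // output, in display order.
  std::vector<int32_t> Push(int32_t poc) {
    pending_.push(poc);
    std::vector<int32_t> ready;
    while (pending_.size() > static_cast<size_t>(window_)) {
      ready.push_back(pending_.top());
      pending_.pop();
    }
    return ready;
  }

  // Drains everything that is still buffered, e.g. at end of stream.
  std::vector<int32_t> Flush() {
    std::vector<int32_t> ready;
    while (!pending_.empty()) {
      ready.push_back(pending_.top());
      pending_.pop();
    }
    return ready;
  }

 private:
  int window_;
  // Min-heap: the smallest POC is always on top.
  std::priority_queue<int32_t, std::vector<int32_t>, std::greater<int32_t>>
      pending_;
};
```

Feeding the decode order 0, 4, 2, 1, 3, 8, 6, 5, 7 into a queue with a window of 2 and flushing at the end yields 0 through 8 in ascending order. A window that is too small would emit frames out of order, which is why the value signalled in the stream matters.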

Extracting and caching SPS / PPS / VPS

Now we start on the actual decoding logic. First, we extract the SPS / PPS / VPS, parse them, and cache them:

// media/gpu/mac/vt_video_decode_accelerator_mac.cc

switch (nalu.nal_unit_type) {

// Omitted

...

// Parse SPS

case H265NALU::SPS_NUT: {

int sps_id = -1;

result = hevc_parser_.ParseSPS(&sps_id);

if (result == H265Parser::kUnsupportedStream) {

WriteToMediaLog(MediaLogMessageLevel::kERROR, "Unsupported SPS");

NotifyError(PLATFORM_FAILURE, SFT_UNSUPPORTED_STREAM);

return;

}

if (result != H265Parser::kOk) {

WriteToMediaLog(MediaLogMessageLevel::kERROR, "Could not parse SPS");

NotifyError(UNREADABLE_INPUT, SFT_INVALID_STREAM);

return;

}

// Cache the SPS NALU data by sps_id

seen_sps_[sps_id].assign(nalu.data, nalu.data + nalu.size);

break;

}

// Parse PPS

case H265NALU::PPS_NUT: {

int pps_id = -1;

result = hevc_parser_.ParsePPS(nalu, &pps_id);

if (result == H265Parser::kUnsupportedStream) {

WriteToMediaLog(MediaLogMessageLevel::kERROR, "Unsupported PPS");

NotifyError(PLATFORM_FAILURE, SFT_UNSUPPORTED_STREAM);

return;

}

if (result == H265Parser::kMissingParameterSet) {

WriteToMediaLog(MediaLogMessageLevel::kERROR,

"Missing SPS from what was parsed");

NotifyError(PLATFORM_FAILURE, SFT_INVALID_STREAM);

return;

}

if (result != H265Parser::kOk) {

WriteToMediaLog(MediaLogMessageLevel::kERROR, "Could not parse PPS");

NotifyError(UNREADABLE_INPUT, SFT_INVALID_STREAM);

return;

}

// Cache the PPS NALU data by pps_id

seen_pps_[pps_id].assign(nalu.data, nalu.data + nalu.size);

// Also submit the PPS to VT, which handles frames in the same GOP that reference different PPSs

nalus.push_back(nalu);

data_size += kNALUHeaderLength + nalu.size;

break;

}

// Parse VPS

case H265NALU::VPS_NUT: {

int vps_id = -1;

result = hevc_parser_.ParseVPS(&vps_id);

if (result == H265Parser::kUnsupportedStream) {

WriteToMediaLog(MediaLogMessageLevel::kERROR, "Unsupported VPS");

NotifyError(PLATFORM_FAILURE, SFT_UNSUPPORTED_STREAM);

return;

}

if (result != H265Parser::kOk) {

WriteToMediaLog(MediaLogMessageLevel::kERROR, "Could not parse VPS");

NotifyError(UNREADABLE_INPUT, SFT_INVALID_STREAM);

return;

}

// Cache the VPS NALU data by vps_id

seen_vps_[vps_id].assign(nalu.data, nalu.data + nalu.size);

break;

}

// Omitted

...

}

Creating the decode format and session

From the parsed VPS, SPS, and PPS, we can create the decode format:

// media/gpu/mac/vt_video_decode_accelerator_mac.cc

#if BUILDFLAG(ENABLE_HEVC_PARSER_AND_HW_DECODER)

// Create the decode format (CMFormatDescriptionRef) from the VPS, SPS, and PPS

base::ScopedCFTypeRef<CMFormatDescriptionRef> CreateVideoFormatHEVC(

const std::vector<uint8_t>& vps,

const std::vector<uint8_t>& sps,

const std::vector<uint8_t>& pps) {

DCHECK(!vps.empty());

DCHECK(!sps.empty());

DCHECK(!pps.empty());

// Build the configuration records.

std::vector<const uint8_t*> nalu_data_ptrs;

std::vector<size_t> nalu_data_sizes;

nalu_data_ptrs.reserve(3);

nalu_data_sizes.reserve(3);

nalu_data_ptrs.push_back(&vps.front());

nalu_data_sizes.push_back(vps.size());

nalu_data_ptrs.push_back(&sps.front());

nalu_data_sizes.push_back(sps.size());

nalu_data_ptrs.push_back(&pps.front());

nalu_data_sizes.push_back(pps.size());

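// A key point here: within one GOP, frames may reference two or more different PPSs.

// For example, the I frame references the PPS with pps_id 0 while a P frame references the PPS with pps_id 1.

// In my own testing, there are two ways to handle this:

// Option 1: pass both PPSs in and create the CMFormatDescriptionRef from them (nalu_data_ptrs then has length 4)

// Option 2 (the one used in this article): still pass only one PPS, but submit the PPS NALU data to VT; VT resolves the PPS references automatically and handles this case, see "vt_video_decode_accelerator_mac.cc;l=1380"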

base::ScopedCFTypeRef<CMFormatDescriptionRef> format;

if (__builtin_available(macOS 11.0, *)) {

OSStatus status = CMVideoFormatDescriptionCreateFromHEVCParameterSets(

kCFAllocatorDefault,

nalu_data_ptrs.size(), // parameter_set_count

&nalu_data_ptrs.front(), // &parameter_set_pointers

&nalu_data_sizes.front(), // &parameter_set_sizes

kNALUHeaderLength, // nal_unit_header_length

extensions, format.InitializeInto());

OSSTATUS_LOG_IF(WARNING, status != noErr, status)

<< "CMVideoFormatDescriptionCreateFromHEVCParameterSets()";

}

return format;

}

#endif // BUILDFLAG(ENABLE_HEVC_PARSER_AND_HW_DECODER)

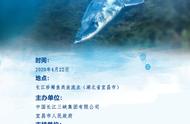

(The VideoToolbox decoding flow; image from Apple)

After creating the decode format, we go on to create the decode session:

// media/gpu/mac/vt_video_decode_accelerator_mac.cc

bool VTVideoDecodeAccelerator::ConfigureDecoder() {

DVLOG(2) << __func__;

DCHECK(decoder_task_runner_->RunsTasksInCurrentSequence());

base::ScopedCFTypeRef<CMFormatDescriptionRef> format;

switch (codec_) {

case VideoCodec::kH264:

format = CreateVideoFormatH264(active_sps_, active_spsext_, active_pps_);

break;

#if BUILDFLAG(ENABLE_HEVC_PARSER_AND_HW_DECODER)

case VideoCodec::kHEVC:

// Create the CMFormatDescriptionRef

format = CreateVideoFormatHEVC(active_vps_, active_sps_, active_pps_);

break;

#endif // BUILDFLAG(ENABLE_HEVC_PARSER_AND_HW_DECODER)

case VideoCodec::kVP9:

format = CreateVideoFormatVP9(

cc_detector_->GetColorSpace(config_.container_color_space),

config_.profile, config_.hdr_metadata,

cc_detector_->GetCodedSize(config_.initial_expected_coded_size));

break;

default:

NOTREACHED() << "Unsupported codec.";

}

if (!format) {

NotifyError(PLATFORM_FAILURE, SFT_PLATFORM_ERROR);

return false;

}

if (!FinishDelayedFrames())

return false;

format_ = format;

session_.reset();

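// Use the decode format created above to create the decode session

// For VP9, hardware decoding is required

// For HEVC, for several possible reasons, we do not force hardware decoding (VT picks the most suitable decoder itself)

// Possible reasons:

// 1. The GPU does not support hardware decoding (in which case we want VT software decoding)

// 2. The profile is not supported (e.g. M1 supports HEVC Rext hardware decoding while Intel/AMD GPUs do not, so we want software decoding)

// 3. The GPU is busy or short on resources, in which case we want software decoding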

const bool require_hardware = config_.profile == VP9PROFILE_PROFILE0 ||

config_.profile == VP9PROFILE_PROFILE2;

// The video may be HDR, so we want the output pix_fmt to be

// kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange

const bool is_hbd = config_.profile == VP9PROFILE_PROFILE2 ||

config_.profile == HEVCPROFILE_MAIN10 ||

config_.profile == HEVCPROFILE_REXT;

// Create the decode session

if (!CreateVideoToolboxSession(format_, require_hardware, is_hbd, &callback_,

&session_, &configured_size_)) {

NotifyError(PLATFORM_FAILURE, SFT_PLATFORM_ERROR);

return false;

}

// Report whether hardware decode is being used.

bool using_hardware = false;

base::ScopedCFTypeRef<CFBooleanRef> cf_using_hardware;

if (VTSessionCopyProperty(

session_,

// kVTDecompressionPropertyKey_UsingHardwareAcceleratedVideoDecoder

CFSTR("UsingHardwareAcceleratedVideoDecoder"), kCFAllocatorDefault,

cf_using_hardware.InitializeInto()) == 0) {

using_hardware = CFBooleanGetValue(cf_using_hardware);

}

UMA_HISTOGRAM_BOOLEAN("Media.VTVDA.HardwareAccelerated", using_hardware);

if (codec_ == VideoCodec::kVP9 && !vp9_bsf_)

vp9_bsf_ = std::make_unique<VP9SuperFrameBitstreamFilter>();

// Record that the configuration change is complete.

#if BUILDFLAG(ENABLE_HEVC_PARSER_AND_HW_DECODER)

configured_vps_ = active_vps_;

#endif // BUILDFLAG(ENABLE_HEVC_PARSER_AND_HW_DECODER)

configured_sps_ = active_sps_;

configured_spsext_ = active_spsext_;

configured_pps_ = active_pps_;

return true;

}

The session creation logic:

// media/gpu/mac/vt_video_decode_accelerator_mac.cc

// Create a VTDecompressionSession from the CMFormatDescriptionRef

bool CreateVideoToolboxSession(

const CMFormatDescriptionRef format,

bool require_hardware,

bool is_hbd,

const VTDecompressionOutputCallbackRecord* callback,

base::ScopedCFTypeRef<VTDecompressionSessionRef>* session,

gfx::Size* configured_size) {

// Prepare VideoToolbox configuration dictionaries.

base::ScopedCFTypeRef<CFMutableDictionaryRef> decoder_config(

CFDictionaryCreateMutable(kCFAllocatorDefault,

1, // capacity

&kCFTypeDictionaryKeyCallBacks,

&kCFTypeDictionaryValueCallBacks));

if (!decoder_config) {

DLOG(ERROR) << "Failed to create CFMutableDictionary";

return false;

}

CFDictionarySetValue(

decoder_config,

kVTVideoDecoderSpecification_EnableHardwareAcceleratedVideoDecoder,

kCFBooleanTrue);

CFDictionarySetValue(

decoder_config,

kVTVideoDecoderSpecification_RequireHardwareAcceleratedVideoDecoder,

require_hardware ? kCFBooleanTrue : kCFBooleanFalse);

CGRect visible_rect = CMVideoFormatDescriptionGetCleanAperture(format, true);

CMVideoDimensions visible_dimensions = {

base::ClampFloor(visible_rect.size.width),

base::ClampFloor(visible_rect.size.height)};

base::ScopedCFTypeRef<CFMutableDictionaryRef> image_config(

BuildImageConfig(visible_dimensions, is_hbd));

if (!image_config) {

DLOG(ERROR) << "Failed to create decoder image configuration";

return false;

}

// The final step: create the decode session

OSStatus status = VTDecompressionSessionCreate(

kCFAllocatorDefault,

format, // the CMFormatDescriptionRef we created

decoder_config, // video_decoder_specification

image_config, // destination_image_buffer_attributes

callback, // output_callback

session->InitializeInto());

if (status != noErr) {

OSSTATUS_DLOG(WARNING, status) << "VTDecompressionSessionCreate()";

return false;

}

*configured_size =

gfx::Size(visible_rect.size.width, visible_rect.size.height);

return true;

}

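Extracting video frames and decoding

From this step on, we begin actual decoding. Before decoding a frame, we first parse its SliceHeader, fetch the SPS, PPS, and VPS it references from the cache, and compute the POC and the maximum reorder count.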

// media/gpu/mac/vt_video_decode_accelerator_mac.cc

switch (nalu.nal_unit_type) {

case H265NALU::BLA_W_LP:

case H265NALU::BLA_W_RADL:

case H265NALU::BLA_N_LP:

case H265NALU::IDR_W_RADL:

case H265NALU::IDR_N_LP:

case H265NALU::TRAIL_N:

case H265NALU::TRAIL_R:

case H265NALU::TSA_N:

case H265NALU::TSA_R:

case H265NALU::STSA_N:

case H265NALU::STSA_R:

case H265NALU::RADL_N:

case H265NALU::RADL_R:

case H265NALU::RASL_N:

case H265NALU::RASL_R:

case H265NALU::CRA_NUT: {

// Parse the SliceHeader of the video frame

curr_slice_hdr.reset(new H265SliceHeader());

result = hevc_parser_.ParseSliceHeader(nalu, curr_slice_hdr.get(),

last_slice_hdr.get());

if (result == H265Parser::kMissingParameterSet) {

curr_slice_hdr.reset();

last_slice_hdr.reset();

WriteToMediaLog(MediaLogMessageLevel::kERROR,

"Missing PPS when parsing slice header");

continue;

}

if (result != H265Parser::kOk) {

curr_slice_hdr.reset();

last_slice_hdr.reset();

WriteToMediaLog(MediaLogMessageLevel::kERROR,

"Could not parse slice header");

NotifyError(UNREADABLE_INPUT, SFT_INVALID_STREAM);

return;

}

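// This is a workaround: for videos shot on some iOS devices, if the first keyframe after a seek is a CRA frame

// and the next frame is a RASL frame, VT immediately reports kVTVideoDecoderBadDataErr,

// so we check whether the total number of output frames exceeds 5 and skip these RASL frames otherwise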

if (output_count_for_cra_rasl_workaround_ < kMinOutputsBeforeRASL &&

(nalu.nal_unit_type == H265NALU::RASL_N ||

nalu.nal_unit_type == H265NALU::RASL_R)) {

continue;

}

// Use the pps_id in the SliceHeader to look up the cached PPS

const H265PPS* pps =

hevc_parser_.GetPPS(curr_slice_hdr->slice_pic_parameter_set_id);

if (!pps) {

WriteToMediaLog(MediaLogMessageLevel::kERROR,

"Missing PPS referenced by slice");

NotifyError(UNREADABLE_INPUT, SFT_INVALID_STREAM);

return;

}

// Use the sps_id in the PPS to look up the cached SPS

const H265SPS* sps = hevc_parser_.GetSPS(pps->pps_seq_parameter_set_id);

if (!sps) {

WriteToMediaLog(MediaLogMessageLevel::kERROR,

"Missing SPS referenced by PPS");

NotifyError(UNREADABLE_INPUT, SFT_INVALID_STREAM);

return;

}

// Use the vps_id in the SPS to look up the cached VPS

const H265VPS* vps =

hevc_parser_.GetVPS(sps->sps_video_parameter_set_id);

if (!vps) {

WriteToMediaLog(MediaLogMessageLevel::kERROR,

"Missing VPS referenced by SPS");

NotifyError(UNREADABLE_INPUT, SFT_INVALID_STREAM);

return;

}

// Record the currently active SPS / VPS / PPS

DCHECK(seen_pps_.count(curr_slice_hdr->slice_pic_parameter_set_id));

DCHECK(seen_sps_.count(pps->pps_seq_parameter_set_id));

DCHECK(seen_vps_.count(sps->sps_video_parameter_set_id));

active_vps_ = seen_vps_[sps->sps_video_parameter_set_id];

active_sps_ = seen_sps_[pps->pps_seq_parameter_set_id];

active_pps_ = seen_pps_[curr_slice_hdr->slice_pic_parameter_set_id];

// Compute the POC

int32_t pic_order_cnt =

hevc_poc_.ComputePicOrderCnt(sps, pps, *curr_slice_hdr.get());

frame->has_slice = true;

// Whether this is an IDR (here it actually means IRAP)

frame->is_idr = nalu.nal_unit_type >= H265NALU::BLA_W_LP &&

nalu.nal_unit_type <= H265NALU::RSV_IRAP_VCL23;

frame->pic_order_cnt = pic_order_cnt;

// Compute the maximum reorder count

frame->reorder_window = ComputeHEVCReorderWindow(vps);

// Keep the SliceHeader as the previous frame's SliceHeader

last_slice_hdr.swap(curr_slice_hdr);

curr_slice_hdr.reset();

[[fallthrough]];

}

default:

nalus.push_back(nalu);

data_size += kNALUHeaderLength + nalu.size;

break;

}
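The `data_size` bookkeeping above reserves `kNALUHeaderLength` extra bytes per NALU because each NALU handed to VideoToolbox is prefixed with its length instead of an Annex B start code. The sketch below shows that packing step in isolation. `PackNalus` is a hypothetical helper, and a 4-byte big-endian length prefix is assumed here for `kNALUHeaderLength`; the real code writes into a CMBlockBuffer rather than a std::vector.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed value: a 4-byte length prefix per NALU.
constexpr size_t kNALUHeaderLength = 4;

// Hypothetical helper: concatenates NALUs, each preceded by its size as a
// big-endian 32-bit integer.
std::vector<uint8_t> PackNalus(
    const std::vector<std::vector<uint8_t>>& nalus) {
  // First pass: total size, mirroring the data_size accumulation above.
  size_t data_size = 0;
  for (const auto& nalu : nalus)
    data_size += kNALUHeaderLength + nalu.size();

  std::vector<uint8_t> out;
  out.reserve(data_size);
  for (const auto& nalu : nalus) {
    const uint32_t size = static_cast<uint32_t>(nalu.size());
    out.push_back(static_cast<uint8_t>(size >> 24));
    out.push_back(static_cast<uint8_t>(size >> 16));
    out.push_back(static_cast<uint8_t>(size >> 8));
    out.push_back(static_cast<uint8_t>(size));
    out.insert(out.end(), nalu.begin(), nalu.end());
  }
  return out;
}
```

Computing the total size up front allows a single allocation for the whole access unit.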

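Detecting changes in video parameters

(SPS and PPS references of H.264 video frames; image from Apple)

If any of the VPS, PPS, or SPS referenced by a video frame changes, VideoToolbox requires the decode session to be reconfigured: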

// media/gpu/mac/vt_video_decode_accelerator_mac.cc

if (frame->is_idr &&

(configured_vps_ != active_vps_ || configured_sps_ != active_sps_ ||

configured_pps_ != active_pps_)) {

// Some validation logic here

...

// Recreate the decode format and reconfigure the decode session here

if (!ConfigureDecoder()) {

return;

}

}
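The interplay between the seen_, active_, and configured_ buffers can be modelled in a few lines. The sketch below tracks only the PPS for brevity, whereas the code above compares the VPS, SPS, and PPS together. `PpsTracker` and its method names are hypothetical and do not exist in Chromium.

```cpp
#include <cstdint>
#include <map>
#include <vector>

using NaluBytes = std::vector<uint8_t>;

// Hypothetical PPS-only model of the seen_/active_/configured_ bookkeeping.
class PpsTracker {
 public:
  // Stores the raw NALU under its id, replacing any earlier one.
  void Cache(int pps_id, const NaluBytes& bytes) { seen_[pps_id] = bytes; }

  // Marks the PPS referenced by the current slice as active.
  // Returns false if that id was never cached.
  bool Activate(int pps_id) {
    auto it = seen_.find(pps_id);
    if (it == seen_.end())
      return false;
    active_ = it->second;
    return true;
  }

  // The session is only reconfigured on an IRAP picture, and only if the
  // active bytes differ from what the session was configured with.
  bool NeedsReconfigure(bool is_irap) const {
    return is_irap && configured_ != active_;
  }

  // Called after the session has been (re)created successfully.
  void MarkConfigured() { configured_ = active_; }

 private:
  std::map<int, NaluBytes> seen_;
  NaluBytes active_;
  NaluBytes configured_;
};
```

Comparing raw bytes rather than ids matters: a stream may resend a parameter set under the same id with different contents, and only a byte comparison notices that.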

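Setting the target pixel format of output frames

Since we need to support HDR, we check whether the video uses the Main10 / Rext profile and, if so, switch the output to gfx::BufferFormat::P010: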

// media/gpu/mac/vt_video_decode_accelerator_mac.cc

const gfx::BufferFormat buffer_format =

config_.profile == VP9PROFILE_PROFILE2 ||

config_.profile == HEVCPROFILE_MAIN10 ||

config_.profile == HEVCPROFILE_REXT

? gfx::BufferFormat::P010

: gfx::BufferFormat::YUV_420_BIPLANAR;

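Summary

After the steps above, the key hardware decoding flow is essentially complete. The code has landed in Chromium 104 (main branch); the detailed macOS implementation process and the code diffs can be traced in the Crbug (https://bugs.chromium.org/p/chromium/issues/detail?id=1300444).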

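Hardware decoding on Windows

With the experience gained from macOS hardware decoding, attempting Windows hardware decoding became somewhat easier, although there were still a few pitfalls.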

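Trying the Media Foundation approach

As mentioned at the beginning of the article, on Windows, if you can install the HEVC Video Extensions, Edge can hardware-decode HEVC. My initial idea was therefore the same as Edge's: support hardware decoding by directing users to install the HEVC Video Extensions.

First, open any HEVC video in Edge, and you will find that Edge uses VDAVideoDecoder for HEVC hardware decoding: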