Installing kubeadm

1. Add the Kubernetes repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

2. Run the following commands to install kubelet, kubeadm, and kubectl

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable kubelet && systemctl start kubelet

3. List the images that kubeadm requires

kubeadm config images list

The output looks like this:

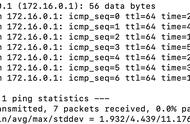

W0815 09:36:13.251611 44413 version.go:98] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

W0815 09:36:13.251730 44413 version.go:99] falling back to the local client version: v1.15.2

k8s.gcr.io/kube-apiserver:v1.15.2

k8s.gcr.io/kube-controller-manager:v1.15.2

k8s.gcr.io/kube-scheduler:v1.15.2

k8s.gcr.io/kube-proxy:v1.15.2

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.3.10

k8s.gcr.io/coredns:1.3.1

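4. Because k8s.gcr.io is blocked in mainland China, we pull the images from the Alibaba Cloud mirror instead. Save the following as k8s_images_install.sh, then run the script to download the images.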

#!/bin/bash
# Pull each image from the Alibaba Cloud mirror, retag it under k8s.gcr.io,
# then remove the mirror tag so only the name kubeadm expects remains.
mirror=registry.cn-hangzhou.aliyuncs.com/google_containers
images=(
  kube-apiserver:v1.15.2
  kube-controller-manager:v1.15.2
  kube-scheduler:v1.15.2
  kube-proxy:v1.15.2
  pause:3.1
  etcd:3.3.10
  coredns:1.3.1
)
for imageName in "${images[@]}"; do
  docker pull "$mirror/$imageName"
  docker tag "$mirror/$imageName" "k8s.gcr.io/$imageName"
  docker rmi "$mirror/$imageName"
done
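The image list in the script is hard-coded, so it has to be edited by hand whenever the kubeadm version changes. As a rough sketch, the same names could be derived from the output of `kubeadm config images list`. The helper names `image_lines` and `to_mirror` and the `MIRROR` variable are made up for this illustration and are not part of kubeadm.

```shell
# Mirror registry used throughout this article.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# Keep only image lines from stdin (drops warnings such as the W0815 ... lines).
image_lines() {
  grep '^k8s\.gcr\.io/'
}

# Rewrite k8s.gcr.io/<image> names read from stdin to their mirror equivalents.
to_mirror() {
  sed "s|^k8s\.gcr\.io/|${MIRROR}/|"
}

# Possible usage on a host that has kubeadm and docker installed:
#   kubeadm config images list 2>/dev/null | image_lines | while read -r image; do
#     mirror_image=$(printf '%s\n' "$image" | to_mirror)
#     docker pull "$mirror_image" && docker tag "$mirror_image" "$image" && docker rmi "$mirror_image"
#   done
```

kubeadm init also accepts an `--image-repository` flag, which may make the retagging step unnecessary; check `kubeadm init --help` for the version you have installed before relying on it.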

5. Then run the following command to initialize the control plane with kubeadm.

kubeadm init --kubernetes-version=v1.15.2 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12
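The two CIDR flags in this command must describe ranges that do not overlap, and 10.244.0.0/16 is also the network that the flannel manifest uses by default. The sketch below is one way to check a pair of ranges before running kubeadm init. The function names are invented for this example, and it assumes well-formed IPv4 CIDR strings without leading zeros.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print the first and last address of a CIDR block as two integers.
cidr_range() {
  ip=${1%/*}
  prefix=${1#*/}
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  start=$(( base & mask ))
  end=$(( start | (~mask & 0xFFFFFFFF) ))
  echo "$start $end"
}

# Succeed (exit status 0) if the two CIDR blocks share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")   # $1 $2 = first range, $3 $4 = second
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Example:
#   cidrs_overlap 10.244.0.0/16 10.96.0.0/12 && echo "ranges overlap, pick different CIDRs"
```

For the values used here, 10.96.0.0/12 spans 10.96.0.0 through 10.111.255.255, so it does not collide with 10.244.0.0/16.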

When initialization completes, kubeadm prints:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.10.89:6443 --token linp38.sdzgoaw3jvrnr5yq \
    --discovery-token-ca-cert-hash sha256:c8c4d08806ec22851c0eaa4e41962576b19d372e92f638a88e89f166e2a2c4af

Note the join command in this output:

kubeadm join 192.168.10.89:6443 --token linp38.sdzgoaw3jvrnr5yq \
    --discovery-token-ca-cert-hash sha256:c8c4d08806ec22851c0eaa4e41962576b19d372e92f638a88e89f166e2a2c4af

Save it, because we will need it later on the worker nodes. For now, follow the prompt and run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

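By default, a Kubernetes cluster must be accessed over a secure, encrypted connection. These commands copy the security configuration file that the deployment just generated into the .kube directory of the current user. kubectl uses the credentials in this directory by default when it accesses the cluster.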
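As a small illustration of that lookup, the sketch below prints the location kubectl would consult when no `--kubeconfig` flag is passed: the `KUBECONFIG` environment variable if it is set, otherwise `$HOME/.kube/config`. The function names are hypothetical, and the readability check assumes `KUBECONFIG` holds a single path rather than a colon-separated list.

```shell
# Print the kubeconfig location kubectl consults when --kubeconfig is not given.
kubeconfig_path() {
  if [ -n "${KUBECONFIG:-}" ]; then
    printf '%s\n' "$KUBECONFIG"
  else
    printf '%s\n' "$HOME/.kube/config"
  fi
}

# Report whether that file exists and is readable by the current user.
check_kubeconfig() {
  path=$(kubeconfig_path)
  if [ -r "$path" ]; then
    echo "readable: $path"
  else
    echo "missing or unreadable: $path"
    return 1
  fi
}
```

Running `check_kubeconfig` right after the three commands above is a quick way to confirm that the copy and the chown worked for the current user.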

Next, add a network plugin. Until one is installed, Pods that depend on the Pod network, such as CoreDNS, stay in the Pending state and cannot be scheduled, as shown below:

# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-5c98db65d4-5jntb 0/1 Pending 0 2m15s

kube-system coredns-5c98db65d4-db2xj 0/1 Pending 0 2m15s

kube-system etcd-k8s-1.localdomain 1/1 Running 0 81s

kube-system kube-apiserver-k8s-1.localdomain 1/1 Running 0 99s

kube-system kube-controller-manager-k8s-1.localdomain 1/1 Running 0 86s

kube-system kube-flannel-ds-amd64-pc4kp 1/1 Running 0 32s

kube-system kube-proxy-6qwv6 1/1 Running 0 2m15s

kube-system kube-scheduler-k8s-1.localdomain 1/1 Running 0 77s

Install the flannel plugin:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/bc79dd1505b0c8681ece4de4c0d86c5cd2643275/Documentation/kube-flannel.yml

Then check the Pod status again. Every Pod now shows a STATUS of Running.

# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-5c98db65d4-5jntb 1/1 Running 0 26m

kube-system coredns-5c98db65d4-db2xj 1/1 Running 0 26m

kube-system etcd-k8s-1.localdomain 1/1 Running 0 25m

kube-system kube-apiserver-k8s-1.localdomain 1/1 Running 0 25m

kube-system kube-controller-manager-k8s-1.localdomain 1/1 Running 0 25m

kube-system kube-flannel-ds-amd64-9tscr 1/1 Running 0 16m

kube-system kube-flannel-ds-amd64-pc4kp 1/1 Running 0 24m

kube-system kube-flannel-ds-amd64-tts29 1/1 Running 0 17m

kube-system kube-proxy-64f8t 1/1 Running 0 16m

kube-system kube-proxy-6qwv6 1/1 Running 0 26m

kube-system kube-proxy-js7bb 1/1 Running 0 17m

kube-system kube-scheduler-k8s-1.localdomain 1/1 Running 0 25m
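Instead of scanning the STATUS column by eye, the listing can be filtered. This is a minimal sketch: `pods_not_running` is a name chosen for this example, and it assumes the column layout of `kubectl get pods --all-namespaces` shown above, where STATUS is the fourth column.

```shell
# Read `kubectl get pods --all-namespaces` output on stdin and print
# "namespace/name status" for every Pod that is neither Running nor Completed.
pods_not_running() {
  awk '$1 != "NAMESPACE" && NF >= 4 && $4 != "Running" && $4 != "Completed" {
    print $1 "/" $2, $4
  }'
}

# Possible usage on the master:
#   kubectl get pods --all-namespaces | pods_not_running
```

An empty result means every listed Pod is up. Any remaining lines name the Pods that still need attention.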

Configuring the worker nodes

Next, we configure the two worker nodes. Run the following steps on each of them.

1. Add the Kubernetes repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

2. Install kubelet, kubeadm, and kubectl

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable kubelet && systemctl start kubelet

3. Pull the images that the worker nodes need. Worker nodes do not run kube-apiserver, kube-controller-manager, kube-scheduler, or etcd, so only the kube-proxy, pause, and coredns images are required.

#!/bin/bash
# Pull the worker-node images from the Alibaba Cloud mirror and retag them under k8s.gcr.io.
mirror=registry.cn-hangzhou.aliyuncs.com/google_containers
images=(
  kube-proxy:v1.15.2
  pause:3.1
  coredns:1.3.1
)
for imageName in "${images[@]}"; do
  docker pull "$mirror/$imageName"
  docker tag "$mirror/$imageName" "k8s.gcr.io/$imageName"
  docker rmi "$mirror/$imageName"
done

Then, on each worker node, run the join command that kubeadm init printed on the master to add the node to the cluster:

kubeadm join 192.168.10.89:6443 --token linp38.sdzgoaw3jvrnr5yq --discovery-token-ca-cert-hash sha256:c8c4d08806ec22851c0eaa4e41962576b19d372e92f638a88e89f166e2a2c4af
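A join command that was copied by hand is easy to truncate, so it can be worth checking its shape before running it on a node. The sketch below assumes that the flag and its value sit on the same line. `join_flag_value` and `valid_join_command` are hypothetical helper names, and the patterns only check the format (a 6.16 character token and a sha256 hash of 64 hex digits), not whether the token is still valid.

```shell
# Print the value that follows a flag in a kubeadm join command.
# $1 = flag name (for example --token), $2 = the full command text.
join_flag_value() {
  printf '%s\n' "$2" | awk -v flag="$1" '{
    for (i = 1; i < NF; i++) if ($i == flag) { print $(i + 1); exit }
  }'
}

# Succeed if the token and the CA certificate hash both have the expected format.
valid_join_command() {
  token=$(join_flag_value --token "$1")
  hash=$(join_flag_value --discovery-token-ca-cert-hash "$1")
  printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' ||
    { echo "unexpected token format: $token" >&2; return 1; }
  printf '%s\n' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$' ||
    { echo "unexpected CA hash format: $hash" >&2; return 1; }
}

# Bootstrap tokens expire after 24 hours by default. If the saved one has expired,
# a fresh command can be printed on the master with:
#   kubeadm token create --print-join-command
```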

Then run the following command on the master to view the nodes:

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-1.localdomain Ready master 10m v1.15.2

k8s-2.localdomain Ready <none> 89s v1.15.2

k8s-3.localdomain Ready <none> 67s v1.15.2

Troubleshooting

1. Running kubectl get nodes on a worker node prints:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

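kubectl manages the cluster through the kube-apiserver API. The command works on the master because kube-apiserver is running there and the kubeconfig file has been set up for the user. On a worker node, only kube-proxy and kubelet are running. kubectl is not really intended for worker nodes. It should run on a client host, for example as a non-root user on the master. After kubeadm init succeeds, the output tells us to save the admin.conf file on the client host: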

# cat .kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN5RENDQWJDZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRFNU1EZ3hOVEF5TWpneU1sb1hEVEk1TURneE1qQXlNamd5TWxvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTXpMClQycDJWamsyOVB4elBjSk5wSHM1QWxsUVIxNy8zMWRUNXJSTytVSkVFVzI2UC9xc3ZZSWE3U01pYi9IamNON1gKM1JwelMrVzNqVXVQd29MMlRuUkREYkNsSldLdHJ2S1lud2hKTXVYdU5ZWVAzbFNrSFpJQ3hSVkFBaDB5Mzh1bwpjaW9CRGMyQTkzQldqQzBWc2QrdC9EbFJwL0RESGk0dzR1bjVRbVFlbnBhZk14TzJIazVQa2g2Sm9DaWZOamN3CkhadGxMNnZwZi9FMDJiQXh1eGkrV1IwQUxxMG44Z1p5M3huRk5lWHczM2wwOCtiVVJPVDFKbnFKaGh1bEJPdTcKSUI5VWZEeXc0VzhsbEZYRWRrdHhWL214ZWxQbFl6cXduTGN4eFVqUE80cmNBak5YZnFuY1M1UmFvNCt5TnV1NwpKbGR1RytXamRwaGZuRUVHQ2kwQ0F3RUFBYU1qTUNFd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFEdVl1ZTVDU3VkN1BZTzh1Zlh2a0k3Sk1VVzMKdnZjTDVDd3N5MXgwYWxxSUlxSXR4YS90UDRpbXhaQVJoTU1mVE85S3plcGpYd2VVelNTS1JoUWY4S2ZPUTJOeQpFaUJKMHp5VmprcmdUblFlT0xPQWE1TEFyNThBd1ZGWis1aFFNZk9SWENmZ004MVBNVC82NVovQUxQeXozWndICklTd0Noa3JTK2ZhUUhqWGh1M1ZoQ283VlNuU0E0dFF4eGx1Z3RiQWU3Nm1sSXJ0b3o2czd1aEFuN09FbWdYNUIKNjBIN1VGL2VsNzRwVVZjVGszN2haRTRRMWxzNXpDMUlCck04YmFEODQyWTA1SE1zRE5TZnhlR3Iyb0RQWWt6SwprQk9OMkZoOGptODlldXFic3NGckMxZ1BNSE5FaXc4RUltM056bkw1NmJ2MGtYViszQThZTWtKYUU4ST0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.10.89:6443

name: kubernetes

……

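Once the client runs kubectl with this config file, it connects to port 6443 on the master (which is in the LISTEN state) to fetch data. Without the file, kubectl falls back to localhost:8080, which is what happened on the worker node because the config file could not be found there.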

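The client can also live on another host, or even on a worker node, as long as the config file is placed there in the way the kubeadm output describes. We use scp to send the directory to the target hosts: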

scp -r .kube/ node1:/root

scp -r .kube/ node2:/root

After that, kubectl works on those hosts as well.
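To confirm on a target host which API server kubectl will contact, the server entry can be read straight from the copied file. This is a minimal sketch: `api_server_for` is a name invented for this example, and it relies on the `server:` line appearing as in the kubeconfig shown above.

```shell
# Print the API server address recorded in a kubeconfig file.
# $1 = path to the file (defaults to $HOME/.kube/config).
api_server_for() {
  config=${1:-$HOME/.kube/config}
  if [ -r "$config" ]; then
    awk '$1 == "server:" { print $2 }' "$config"
  else
    # With no kubeconfig available, kubectl falls back to this address,
    # which is why the "localhost:8080 was refused" error appears.
    echo "localhost:8080"
  fi
}

# Possible usage on node1 after the scp:
#   api_server_for /root/.kube/config
```

If the function prints https://192.168.10.89:6443 on the node, the file arrived intact and kubectl get nodes should reach the master.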
