页面表格中找到Server Binaries中的kubernetes-server-linux-amd64.tar.gz文件,下载到本地。该压缩包中包括了k8s需要运行的全部服务程序文件

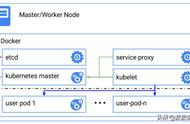

2.2Master安装

2.2.1Docker安装

(1)设置yum源

vi /etc/yum.repos.d/docker.repo [dockerrepo] name=Docker Repository baseurl=https://yum.dockerproject.org/repo/main/centos/$releasever/ enabled=1 gpgcheck=1 gpgkey=https://yum.dockerproject.org/gpg

(2)安装docker

yum install docker-engine

(3)安装后查看docker版本

docker -v

2.2.2etcd服务

etcd做为Kubernetes集群的主要服务,在安装Kubernetes各服务前需要首先安装和启动。

下载etcd二进制文件

https://github.com/etcd-io/etcd/releases

上传到master

可以使用lrzsz,如果没有安装,可以通过yum进行安装 yum install lrzsz

将将etcd和etcdctl文件复制到/usr/bin目录

配置systemd服务文件 /usr/lib/systemd/system/etcd.service

[Unit] Description=Etcd Server After=network.target [Service] Type=simple EnvironmentFile=-/etc/etcd/etcd.conf WorkingDirectory=/var/lib/etcd/ ExecStart=/usr/bin/etcd Restart=on-failure [Install] WantedBy=multi-user.target

启动与测试etcd服务

systemctl daemon-reload systemctl enable etcd.servic e mkdir -p /var/lib/etcd/ systemctl start etcd.service etcdctl cluster-health

2.2.3kube-apiserver服务

解压后将kube-apiserver、kube-controller-manager、kube-scheduler以及管理要使用的kubectl二进制命令文件放到/usr/bin目录,即完成这几个服务的安装。

cp kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/bin/

下面是对kube-apiserver服务进行配置

编辑systemd服务文件 vi /usr/lib/systemd/system/kube-apiserver.service

[Unit] Description=Kubernetes API Serve r Documentation=https://github.com/kubernetes/kubernetes After=etcd.service Wants=etcd.service [Service] EnvironmentFile=/etc/kubernetes/apiserver ExecStart=/usr/bin/kube-apiserver $KUBE_API_ARGS Restart=on-failure Type=notify [Install] WantedBy=multi-user.target

配置文件

创建目录:mkdir /etc/kubernetes

vi /etc/kubernetes/apiserver

KUBE_API_ARGS="--storage-backend=etcd3 --etcd-servers=http://127.0.0.1:2379 --insecure-bind- address=0.0.0.0 --insecure-port=8080 --service-cluster-ip-range=169.169.0.0/16 --service-node- port-range=1-65535 --admission- control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,Defaul tStorageClass,ResourceQuota --logtostderr=true --log-dir=/var/log/kubernetes --v=2"

2.2.4kube-controller-manager服务

kube-controller-manager服务依赖于kube-apiserver服务:

配置systemd服务文件:vi /usr/lib/systemd/system/kube-controller-manager.servic

[Unit] Description=Kubernetes Controller Manager Documentation=https://github.com/GoogleCloudPlatform/kubernetes After=kube-apiserver.service Requires=kube-apiserver.service [Service] EnvironmentFile=-/etc/kubernetes/controller-manager ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS Restart=on-failure LimitNOFILE=65536 [Install] WantedBy=multi-user.target

配置文件 vi /etc/kubernetes/controller-manager

KUBE_CONTROLLER_MANAGER_ARGS="--master=http://192.168.126.140:8080 --logtostderr=true --log- dir=/var/log/kubernetes --v=2"

2.2.5kube-scheduler服务

kube-scheduler服务也依赖于kube-apiserver服务。

配置systemd服务文件:vi /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=kube-apiserver.service Requires=kube-apiserver.service

[Service]

EnvironmentFile=-/etc/kubernetes/scheduler ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_ARGS Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

配置文件:vi /etc/kubernetes/scheduler

KUBE_SCHEDULER_ARGS="--master=http://192.168.126.140:8080 --logtostderr=true --log- dir=/var/log/kubernetes --v=2"

2.2.6启动

完成以上配置后,按顺序启动服务

systemctl daemon-reload

systemctl enable kube-apiserver.service systemctl start kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl start kube-controller-manager.service systemctl enable kube-scheduler.service systemctl start kube-scheduler.service

检查每个服务的健康状态:

systemctl status kube-apiserver.service

systemctl status kube-controller-manager.service systemctl status kube-scheduler.service

2.3Node1安装

在Node1节点上,以同样的方式把从压缩包中解压出的二进制文件kubelet kube-proxy放到/usr/bin目录中。在Node1节点上需要预先安装docker,请参考Master上Docker的安装,并启动Docker

2.3.1kubelet服务

配置systemd服务文件:vi /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service] WorkingDirectory=/var/lib/kubelet

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet $KUBELET_ARGS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

mkdir -p /var/lib/kubelet

配置文件:vi /etc/kubernetes/kubelet

KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --hostname-override=192.168.126.142 -- logtostderr=false --log-dir=/var/log/kubernetes --v=2 --fail-swap-on=false"

用于kubelet连接Master Apiserver的配置文件

vi /etc/kubernetes/kubeconfig

apiVersion: v1 kind: Config clusters: -cluster: server: http://192.168.126.140:8080 name: local contexts: -context: cluster: local name: mycontext current-context: mycontext

2.3.2kube-proxy服务

kube-proxy服务依赖于network服务,所以一定要保证network服务正常,如果network服务启动失败,常见解决方案有以下几中:

1.和 NetworkManager 服务有冲突,这个好解决,直接关闭 NetworkManger 服务就好了, service NetworkManager stop,并且禁止开机启动 chkconfig NetworkManager off 。之后重启就好了

2.和配置文件的MAC地址不匹配,这个也好解决,使用ip addr(或ifconfig)查看mac地址, 将/etc/sysconfig/network-scripts/ifcfg-xxx中的HWADDR改为查看到的mac地址

3.设定开机启动一个名为NetworkManager-wait-online服务,命令为:

systemctl enable NetworkManager-wait-online.service

4.查看/etc/sysconfig/network-scripts下,将其余无关的网卡位置文件全删掉,避免不必要的影响,即只留一个以ifcfg开头的文件

配置systemd服务文件:vi /usr/lib/systemd/system/kube-proxy.service

[Unit] Description=Kubernetes Kube-proxy Server Documentation=https://github.com/GoogleCloudPlatform/kubernetes After=network.service Requires=network.service [Service] EnvironmentFile=/etc/kubernetes/proxy ExecStart=/usr/bin/kube-proxy $KUBE_PROXY_ARGS Restart=on-failure LimitNOFILE=65536 KillMode=process [Install] WantedBy=multi-user.target

配置文件:vi /etc/kubernetes/proxy

KUBE_PROXY_ARGS="--master=http://192.168.126.140:8080 --hostname-override=192.168.126.142 -- logtostderr=true --log-dir=/var/log/kubernetes --v=2"

2.3.3启动

systemctl daemon-reload systemctl enable kubelet systemctl start kubelet systemctl status kubelet systemctl enable kube-proxy systemctl start kube-proxy systemctl status kube-proxy

2.4Node2安装

请参考Node1安装,注意修改IP

2.5健康检查与示例测试

查看集群状态

查看master集群组件状态

nginx-rc.yaml

apiVersion: v1 kind: ReplicationController metadata: name: nginx spec: replicas: 3 selector: app: nginx template: metadata: labels: app: nginx spec: containers: - name: nginx image: nginx ports: - containerPort: 80

kubectl create -f nginx-rc.yaml

nginx-svc.yaml

apiVersion: v1 kind: Service metadata: name: nginx spec: type: NodePort ports: - port: 80 nodePort: 33333 selector: app: nginx

kubectl create -f nginx-svc.yaml

查看pod